INTRODUCTION

Malaria is one of the leading causes of morbidity and mortality in sub-Saharan Africa (World Health Organization (WHO), 2015). The disease accounted for about 60% of outpatient visits to health facilities in Nigeria in 2015 (Burlando et al., 2014; Fana et al., 2015). Delivering effective and quality health care to patients has been reported as one of the main approaches to overcoming the problem (Federal Ministry of Health (FMOH), 2015; Ofori-Asenso and Agyeman, 2016; WHO, 2015). Such care depends on many factors, including the practitioners’ knowledge of the disease and its regimen, their attitudes, and their treatment practices during the management of patients (Eke et al., 2014; Liu et al., 2015).

The use of knowledge, attitude, and practice (KAP) instruments in cross-sectional surveys has been widely reported, especially in the social sciences and public health, to assess behavior-related attributes (Creswell, 2014; Hlongwana et al., 2009; Launiala, 2009). However, a review of malaria-related KAP studies showed that most of the instruments were not validated (Bolarinwa, 2015). To assess health care professionals’ KAP effectively in primary health care (PHC) facilities, reliable and valid instruments containing appropriate items that capture the desired attributes are needed. This can be ascertained by subjecting such instruments to rigorous validation as an initial step of the study (Müller et al., 2015; Parsian and Dunning, 2009), using an appropriate test theory approach such as classical test theory (CTT) (Müller et al., 2015).

Various CTT approaches have been used for the validation of survey instruments, including exploratory, confirmatory, and reliability methods (Agarwal, 2011; Bolarinwa, 2015; Müller et al., 2015). Exploratory factor analysis (EFA) is useful in situations where the link between the observed and latent variables is unknown or uncertain (Van Der Eijk et al., 2015), while confirmatory factor analysis (CFA) is useful when the researcher has some knowledge of the underlying latent variable structure (Hamad et al., 2016; Hsieh and Shannon, 2005). Therefore, EFA is considered more of a theory-generating procedure, while CFA is considered a theory-testing procedure (Agarwal, 2011; Bolarinwa, 2015; Gie Yong and Pearce, 2013; Hamad et al., 2016; Liuzhan, 2014; Shapiro, 2007). In addition, model fit has been assessed using different goodness-of-fit indicators, such as the chi-square test, root mean square error of approximation (RMSEA), standardized root mean square residual (SRMR), and others including the normed fit index (NFI), non-normed fit index (also known as the Tucker–Lewis index), incremental fit index, and comparative fit index, each of which has a different range of values (Kline, 2015; Sivo et al., 2006).

According to CTT, a person’s observed score on a test is the sum of a true score (the error-free score that would be obtained if there were no errors in measurement) and an error score (Müller et al., 2015; Peters, 2014). Reliability cannot be quantified directly because it is impossible to measure true scores (Gie Yong and Pearce, 2013). Instead, Cronbach’s alpha is used to estimate the internal consistency of the set of items in a test instrument (Müller et al., 2015). Similarly, the stability (test–retest reliability) of an instrument can be ascertained by administering the same instrument to the same respondents on two or more occasions at different time intervals and estimating the correlation between the collected datasets (Field, 2009; Joke et al., 2014). The main purpose of this study was to develop a patients’ self-reported instrument for KAP studies and to evaluate its validity and reliability.

MATERIALS AND METHODS

For clarity, the instrument development and validation processes are described in four subsections (instrument development, face and content validation, construct validity, and reliability tests), following the format described by Worthington and Whittaker (2006).

Ethics and consent approval

Approval for the conduct of this study was granted by the Joint Research Review and Ethics Committee, Research Management Centre (RMC), MAHSA University, Malaysia (Ref. number: RMC/EC01/2016; Dated 25/11/2016). This approval was subsequently used to obtain permission from the Plateau state MOH, Jos, Nigeria, and from the directors of PHCs of the selected local government areas (LGAs) prior to data collection. In addition, the purpose of the study was explained to the prospective participants through the “study participants’ informed consent forms,” and those who consented to participate indicated this by signing the form.

Instrument development

Sources and selection of the variables

Based on the goal of the study, the first stage of instrument development involved generating a list of variables that best represented patients’ KAP related to uncomplicated malaria management. Both an electronic search of databases (Medical Subject Headings, Medline, the Web of Science, Embase, Global Health, and Google Scholar) and a manual search of the references of relevant studies published between January 2005 and December 2016 were carried out to identify articles containing information on KAP. Terms and phrases such as “patients’ knowledge on uncomplicated malaria and medication,” “patients’ attitudes toward uncomplicated malaria and medication,” “patients’ uncomplicated malaria medication practices,” “patients’ knowledge, attitudes and practices on uncomplicated malaria and medication,” and “patients’ KAP on uncomplicated malaria” were systematically searched.

A few previous studies related to KAP on uncomplicated malaria in Nigeria, published in English-language peer-reviewed journals, were identified; however, there was scanty information on the reliability and validity of the instruments used (Adetola et al., 2014; Edet-Utan et al., 2016; Jimam et al., 2015; Orimadegun and Ilesanmi, 2015; Uchenna et al., 2015). To ensure that all the relevant variables were used in the design of the proposed draft KAP instrument, the Nigerian and WHO malaria treatment guidelines were also used as sources of variables (FMOH, 2015; Hsieh and Shannon, 2005; WHO, 2015).

The identified variables were carefully selected and used to construct the relevant statements in a simplified way that could be easily understood by the prospective respondents, so as to encourage them to respond willingly, as recommended by Gasquet et al. (2004). Two researchers independently reviewed the extracted variables, after which they met and agreed on the appropriate variables to be used for item generation.

Item generation and presentation

The variables were used to generate statements/items for the instrument using polytomous scoring formats. A three-point scale (“yes,” “not sure,” or “no”) was used for knowledge-related items. Five-point scales using the terms “strongly agree,” “agree,” “neutral,” “disagree,” and “strongly disagree,” and “very often,” “often,” “sometimes,” “rarely,” and “never” were used to describe how strongly respondents felt about the attitudinal and practice-related statements, respectively (Burns et al., 2008). In the end, a draft patients’ self-reported KAP scale known as the “patients’ knowledge, attitudes, and practice instrument for uncomplicated malaria (PKAPIUM),” containing 31 items, was developed for pilot testing.

Description of the draft PKAPIUM instruments

The draft PKAPIUM scale was divided into four subsections, namely, socio-demographic characteristics of respondents, knowledge, attitudes, and practices.

The socio-demographic subsection consisted of six items that asked for basic information about patients, including gender, age, marital status, level of education, occupation, and monthly income.

Subsection 2 contained eight items for assessing patients’ knowledge of the disease, including its cause, transmission, signs and symptoms, and the recommended antimalarial drugs used in its treatment. Responses to the eight items were recorded using the three options “no,” “not sure,” or “yes,” which were scored as 0, 0, or 1, in that order.

The third subsection had 13 items for assessing respondents’ attitudes toward uncomplicated malaria and its management. The magnitude of their attitudes was assessed on a five-point Likert scale scored as 1 = strongly disagree, 2 = disagree, 3 = neutral, 4 = agree, and 5 = strongly agree.

The last subsection of the draft PKAPIUM scale consisted of 10 items for evaluating patients’ general practices during uncomplicated malaria management. Responses were scored on a five-point Likert scale as 1 = never, 2 = rarely, 3 = sometimes, 4 = often, and 5 = very often.
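The scoring rules described for the three scored subsections can be written as simple lookup tables. The sketch below is illustrative only: the table names, the function name, and the sample responses are hypothetical and are not part of the original instrument.

```python
# Hypothetical lookup tables mirroring the scoring scheme described in the text.
KNOWLEDGE_SCORES = {"no": 0, "not sure": 0, "yes": 1}
ATTITUDE_SCORES = {
    "strongly disagree": 1, "disagree": 2, "neutral": 3,
    "agree": 4, "strongly agree": 5,
}
PRACTICE_SCORES = {
    "never": 1, "rarely": 2, "sometimes": 3, "often": 4, "very often": 5,
}


def score_responses(responses, table):
    """Convert a list of response labels into numeric scores."""
    return [table[label.strip().lower()] for label in responses]


# Made-up answers to four knowledge items from one respondent.
knowledge_scores = score_responses(["yes", "not sure", "no", "yes"], KNOWLEDGE_SCORES)
knowledge_total = sum(knowledge_scores)
```

Keeping the three tables separate makes it harder to apply an attitude label to a practice item by mistake, since an unknown label raises a `KeyError`.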

Face and content validity study

The content validity of an instrument can be determined qualitatively and quantitatively using experts in the field (Ayre and Scally, 2014; Sangoseni et al., 2013). The qualitative aspect (face validity) can be considered an extension of comprehensive variable selection and item generation/presentation (Saint-Maurice et al., 2014). It involved checking the appropriateness of the constructed statements for each item in terms of wording, structure, order, and scoring format, among other things (Creswell, 2014; Sangoseni et al., 2013). The quantitative approach, on the other hand, involved quantifying the experts’ views on the content validity of the draft instrument (Devon et al., 2007). The content validity index (CVI) is one of the widely used empirical methods for estimating the content validity of study instruments (Devon et al., 2007; Rodrigues et al., 2017). This step was important for producing an instrument that could measure the aforementioned patient characteristics with reproducible results. It was needed to ensure that the necessary items were included in the instrument, that the items were relevant to the purpose of the study, and that there was an appropriate balance of elements, without some of them being over- or under-represented (Hu et al., 2012).

Sampling and sample size for the content validity study

A purposive sampling approach was used to select health care professionals who were experts (family medicine physicians, and clinical and public health pharmacists) in the field of study. This sampling method was preferred in order to obtain respondents with good knowledge and experience in the study area. The selected respondents were considered “experts” because of their training background and experience in the management of ailments including malaria.

According to Lynn’s criteria, between 3 and 10 experts are recommended as appropriate for content validity studies (Ayre and Scally, 2014; Lynn, 1986). In the present study, six experts were considered adequate to control for chance agreement, because previous studies have shown that the probability of chance agreement decreases as the number of experts increases (Ayre and Scally, 2014).

Data collection for the content validity study

The experts were presented with the draft PKAPIUM instrument and asked to study it carefully and comment on grammatical errors, punctuation, the wording of the statements, the order of arrangement, and the scoring formats for the items. They were given three working days, after which the researcher went round and collated their inputs.

All amendments to the 31-item draft instrument suggested by the experts were made, after which it was re-administered to the same six experts together with a cover letter explaining the purpose of the study, the need for content validation of the research instrument, and a detailed description of how to evaluate the items. The experts were asked to independently express their views on the contents of the draft PKAPIUM scale in terms of relevance, clarity, simplicity, and comprehensiveness (Devon et al., 2007) on a short four-point Likert scale given to them. In rating relevance on the four-point scale, one point implied “not relevant,” two points “item needs some revision,” three points “relevant but needs minor revision,” and four points “very relevant” (Devon et al., 2007). The same approach was applied to the clarity, simplicity, and comprehensiveness of the instrument’s contents, and the responses were collated after 3 days for analysis.

Statistical analysis

The experts’ views expressed in the content validity forms were used to calculate content validity indices, including the item content validity index (I-CVI) and the scale content validity index (S-CVI) (Devon et al., 2007). The I-CVI was calculated for each item in order to decide whether to accept or reject the item (Devon et al., 2007). It was estimated as the proportion of experts who rated the item 3 or 4 out of the total number of experts (Ayre and Scally, 2014; Zamanzadeh et al., 2015). Values of I-CVI range between 0 and 1. An item was considered relevant if its I-CVI was >0.79, in need of revision if the value fell between 0.70 and 0.79, and rejected if the value was <0.70 (Devon et al., 2007).

The S-CVI is described as the average value of the I-CVI for the instrument, and both the universal agreement (UA) among the experts (S-CVI/UA) and the average CVI (S-CVI/Ave) methods (Devon et al., 2007) were used to estimate it. The S-CVI/UA was calculated by counting the items with an I-CVI of 1 and dividing by the total number of items (Zamanzadeh et al., 2015), while the S-CVI/Ave was estimated by summing the I-CVI values and dividing by the total number of items (Zamanzadeh et al., 2015). Values of S-CVI/UA ≥ 0.80 and S-CVI/Ave ≥ 0.90 were considered to indicate excellent content validity (Ayre and Scally, 2014; Zamanzadeh et al., 2015).
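The index calculations described above can be illustrated with a short sketch. It follows the definitions given in the text (ratings of 3 or 4 count as agreement; cut-offs of 0.79 and 0.70), but the function names and the expert ratings are made up for illustration and are not the study data.

```python
def item_cvi(ratings):
    """I-CVI: proportion of experts who rated the item 3 or 4."""
    return sum(1 for r in ratings if r >= 3) / len(ratings)


def classify_item(i_cvi):
    """Apply the decision rule from the text to one I-CVI value."""
    if i_cvi > 0.79:
        return "retain"
    if i_cvi >= 0.70:
        return "revise"
    return "reject"


def scale_cvi(rating_matrix):
    """Return (S-CVI/UA, S-CVI/Ave) for a list of per-item expert ratings."""
    i_cvis = [item_cvi(item) for item in rating_matrix]
    # UA: share of items on which every expert agreed (I-CVI of exactly 1).
    s_cvi_ua = sum(1 for v in i_cvis if v == 1.0) / len(i_cvis)
    # Ave: mean of the item-level indices.
    s_cvi_ave = sum(i_cvis) / len(i_cvis)
    return s_cvi_ua, s_cvi_ave


# Hypothetical relevance ratings from six experts for three items.
example_ratings = [
    [4, 4, 4, 3, 4, 4],
    [4, 3, 4, 4, 2, 4],
    [2, 3, 2, 4, 1, 3],
]
decisions = [classify_item(item_cvi(item)) for item in example_ratings]
s_cvi_ua, s_cvi_ave = scale_cvi(example_ratings)
```

With six experts, a single rating below 3 already lowers an item's I-CVI to 5/6, so S-CVI/UA is a much stricter summary than S-CVI/Ave.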

Construct validity and reliability

During the study, the researchers ensured the anonymity and confidentiality of the respondents through the following: (i) the study instruments were anonymous, (ii) respondents were asked not to write their names on the study instruments to avoid the possibility of tracking, (iii) no code was used to identify any respondent, (iv) a locked collection box with a slot for inserting A4-sized paper was provided and respondents were asked to drop the completed instrument into it, and (v) the box was opened, and the completed instruments collated for coding and screening for analysis, only after everyone had submitted.

Study location

The study was carried out in PHC facilities of Plateau state, north-central Nigeria. The choice of the state was based on the logistics of the first author’s ongoing Ph.D. research. Plateau state is located between latitude 8°24ʹN and longitudes 8°32ʹ and 10°38ʹ East, has a population of 5,178,712, and occupies 30,913 square kilometers [National Population Commission-National Malaria Control Program and International Classification of Functioning, Disability and Health (NPC-NMCP and ICF), 2012], with 17 LGAs distributed across three senatorial zones.

Study population and sampling method

A convenience sampling method was used to select six government PHC facilities across the state, with at least one facility from each of the three senatorial zones. Only patients receiving treatment for uncomplicated malaria were recruited, by simple random sampling, to participate in the study.

Sample size calculation

The use of absolute sample size figures (Garson, 2008; Habing, 2003) and of minimum ratios of sample size to number of items (Mundfrom et al., 2005; Pearson and Mundform, 2010) has been proposed for sample size estimation in factor analysis. The Monte Carlo simulation principle was used to arrive at a minimum acceptable sample size of 300, which was considered adequate for achieving high communalities and good loading strength for the draft PKAPIUM scale (Hogarty et al., 2005; Muthén and Muthén, 2002; Pearson and Mundform, 2010; Stephenson and Holbert, 2003; Thoemmes et al., 2010; Wolf et al., 2013).

Similarly, a sample size of 90 respondents, also receiving treatment for the same disease, was estimated for the test–retest reliability study. This was based on at least 10% of the main sample size of 300 (i.e., 30 respondents) (Yin et al., 2012), which was tripled to allow for possible poor patient compliance with participation in the study.

Data collection

The draft 31-item PKAPIUM scale was self-administered to 300 patients who were receiving treatment for uncomplicated malaria in the six selected PHC facilities in Plateau state, and who completed and returned it to the researchers.

This was followed by self-administration of the reduced 21-item version of the PKAPIUM scale to 90 selected patients who consented to participate in the study. With the cooperation of the staff in charge of the PHC facilities, the same patients were asked to come back to the facilities after 14 days to complete the same instrument a second time. It was assumed that there were no significant changes in the respondents’ attributes within the 14-day period, and that the 14-day gap was long enough that their memories of the first administration did not influence the results of the second. Patients who requested transport money for the return visit to the PHC facilities were each given not less than 100 naira (N100.00), the Nigerian currency.

Data analysis

The data were manually entered into Microsoft Excel using appropriate coding and scoring formats. Respondents’ acceptance of the instrument was checked by the percentage of items left unanswered and the proportion of respondents who attempted all the items (Reito et al., 2017). Similarly, the suitability of the items for inclusion in further analysis was checked by determining their floor and ceiling effects (Lim et al., 2015; Terwee et al., 2007): items for which more than 15% of patients obtained the worst or best possible score were considered to have significant (p < 0.05) floor or ceiling effects. Items with large amounts of missing data (>10% non-response) were identified as likely to reduce the quality and usability of the instrument and were therefore excluded from further analysis (Lim et al., 2015).

Descriptive statistics were used to present the socio-demographic characteristics of the respondents before the validity, internal consistency, and stability of the instrument were assessed using IBM® Statistical Package for Social Sciences (SPSS®) version 23 and AMOS™ (Analysis of Moment Structures) software version 22.00.
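The item screening rules above (15% for floor or ceiling effects, 10% for missing data) can be sketched as a single helper. This is a minimal illustration under assumptions: the function name, the use of `None` to mark an unanswered item, and the sample responses are hypothetical.

```python
def screen_item(responses, min_score, max_score,
                effect_cutoff=0.15, missing_cutoff=0.10):
    """Summarize missing data and floor/ceiling effects for one item.

    `responses` holds numeric scores, with None marking an unanswered item.
    """
    answered = [r for r in responses if r is not None]
    missing = (len(responses) - len(answered)) / len(responses)
    # Proportions are taken over respondents who answered the item.
    floor = sum(1 for r in answered if r == min_score) / len(answered) if answered else 0.0
    ceiling = sum(1 for r in answered if r == max_score) / len(answered) if answered else 0.0
    return {
        "missing": missing,
        "floor": floor,
        "ceiling": ceiling,
        "flagged": (missing > missing_cutoff
                    or floor > effect_cutoff
                    or ceiling > effect_cutoff),
    }


# Made-up five-point responses: one balanced item and one skewed item.
balanced_item = [1, 2, 2, 3, 3, 3, 3, 3, 4, 4, 4, 4, 3, 3, 2, 2, 3, 4, 5, None]
skewed_item = [5] * 6 + [4] * 10 + [3] * 4

balanced_summary = screen_item(balanced_item, min_score=1, max_score=5)
skewed_summary = screen_item(skewed_item, min_score=1, max_score=5)
```

Here the skewed item is flagged because 6 of its 20 responses sit at the maximum score, which exceeds the 15% cut-off.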

Before proceeding with factor analysis, the adequacy of the sample size was checked against the minimum Kaiser–Meyer–Olkin (KMO) requirement of ≥0.50 (Tabachnick and Fidell, 2007; Thoemmes et al., 2010; Wolf et al., 2013). Kaiser’s “eigenvalue-greater-than-one” rule was used to retain factors with eigenvalues greater than one, and the varimax orthogonal rotation method was applied so that the extracted factors could be transformed to improve the interpretation of the results (Gie Yong and Pearce, 2013; Ledesma and Valero-Mora, 2007; Suhr, 2006). The software was set to suppress item loadings of < ±0.30. The first extraction yielded many factors that could not be meaningfully interpreted; hence, the extraction was repeated following the same process, with the number of factors fixed at four. Items with loadings below the minimum value of ±0.30 and communalities of less than 0.50 were considered to have poor or no relationship with the factor and were considered for deletion (Field, 2009), after which the analysis was re-run.
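The two checks named above, the overall KMO measure and Kaiser's eigenvalue rule, can be sketched with NumPy. This is not the SPSS procedure used in the study: it is a minimal illustration on simulated data, and the function names, the random seed, and the simulated two-trait structure are assumptions made for the example. Rotation is not shown.

```python
import numpy as np


def kmo_overall(corr):
    """Overall Kaiser-Meyer-Olkin measure computed from a correlation matrix."""
    inv = np.linalg.inv(corr)
    # Partial correlations are the negated, rescaled off-diagonals of the inverse.
    scale = np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    partial = -inv / scale
    off_diag = ~np.eye(corr.shape[0], dtype=bool)
    r2 = np.sum(corr[off_diag] ** 2)
    p2 = np.sum(partial[off_diag] ** 2)
    return r2 / (r2 + p2)


def kaiser_rule(corr):
    """Return eigenvalues in descending order and the count greater than one."""
    eigenvalues = np.linalg.eigvalsh(corr)[::-1]
    return eigenvalues, int(np.sum(eigenvalues > 1.0))


# Simulated responses: 300 respondents, two latent traits, three items each.
rng = np.random.default_rng(0)
traits = rng.normal(size=(300, 2))
noise = rng.normal(size=(300, 6))
items = 0.8 * np.repeat(traits, 3, axis=1) + 0.6 * noise
corr = np.corrcoef(items, rowvar=False)

kmo_value = kmo_overall(corr)
eigenvalues, n_retained = kaiser_rule(corr)
sample_adequate = kmo_value >= 0.50  # minimum requirement quoted in the text
```

Because the eigenvalues of a correlation matrix sum to the number of items, the eigenvalue-greater-than-one rule retains only factors that explain more variance than a single standardized item.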

Furthermore, CFA was conducted to determine the structure and validity of the new PKAPIUM scale after the deletion using SPSS® AMOS™ version 22.00 (Hamad et al., 2016; Suhr, 2006). The maximum likelihood method was used in estimating the CFA parameters (Hamad et al., 2016; Kline, 2015).

The cross-loading and Fornell–Larcker criteria were used to assess the convergent and discriminant validity of the scale (Henseler et al., 2015). The cross-loading approach was based on the loading strengths between the various constructs and the items that loaded on them in the EFA outputs: strong loadings suggested convergent validity, while weak associations between the same items and other factors indicated discriminant validity (Henseler et al., 2015). Similarly, each construct’s average variance extracted (AVE) was estimated from the standardized regression weights of the items on their respective factors in the CFA outputs; according to the Fornell–Larcker criterion, values greater than 0.50 indicated convergent validity of the constructs (Hair and Lukas, 2014). In addition, the square root of the AVE was determined for each construct and for the whole scale; values greater than the construct’s correlation coefficients with other constructs indicated discriminant validity (Fornell and Larcker, 1981; Henseler et al., 2015). At least three goodness-of-fit indices, namely the chi-square minimum discrepancy divided by its degrees of freedom (CMIN/DF), the RMSEA, and the SRMR, were also used to confirm the fit of the model (Kline, 2015; Sivo et al., 2006). A CMIN/DF of ≤3.00 with a p-value greater than 0.05 would indicate model fit; similarly, RMSEA and SRMR values within the standard cut-offs (≤0.08 to <0.10 for RMSEA and ≤0.09 for SRMR) would further confirm model fit (Kline, 2015; Sivo et al., 2006).

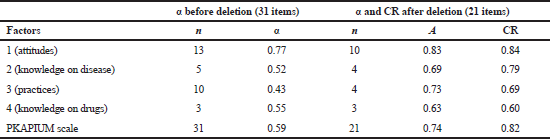
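As an illustration of the Fornell–Larcker checks described above, the sketch below computes the AVE as the mean of the squared standardized loadings, which is the usual definition and is assumed here. The function names, loadings, and inter-construct correlations are hypothetical and are not the study's values.

```python
import math


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings of a construct's items."""
    return sum(l * l for l in loadings) / len(loadings)


def fornell_larcker_ok(ave, correlations_with_others):
    """True if sqrt(AVE) exceeds every correlation with the other constructs."""
    root = math.sqrt(ave)
    return all(root > abs(r) for r in correlations_with_others)


# Made-up standardized loadings for one construct with three items.
example_loadings = [0.8, 0.7, 0.9]
ave = average_variance_extracted(example_loadings)

convergent = ave > 0.50                                  # convergent validity threshold
discriminant = fornell_larcker_ok(ave, [0.45, 0.30])     # hypothetical correlations
```

Comparing the square root of the AVE with the correlations puts both quantities on the same scale, which is why the criterion uses the root rather than the AVE itself.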

The internal consistency reliability of the PKAPIUM scale was assessed using Cronbach’s alpha coefficient (α). To check the effects of the limitations of Cronbach’s alpha on the result (Shook et al., 2004), the composite reliability (CR) (average value of the items’ reliabilities), which draws on the standardized regression weights and measurement error correlations for each item (Shook et al., 2004), was also estimated from the standardized regression weights of the items on their respective factors in the CFA outputs, using the CR calculator described by Raykov (1997). Although the two values may differ, the outcome indicates the presence or absence of consistency in the measurement results. Minimum acceptable values of between 0.70 and 0.79 have been recommended in both cases, with values between 0.80 and <0.90 preferred (Hair and Lukas, 2014), while values >0.90 indicate possible item redundancy (Hair and Lukas, 2014; Müller et al., 2015; Nunnally and Bernstein, 1994). Values of at least 0.60 could also be acceptable since this was an exploratory investigation, as recommended by Liu (2003).

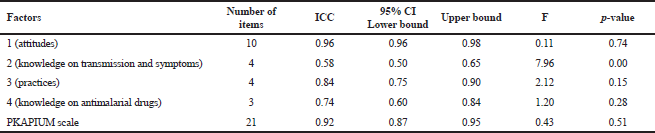
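The two internal consistency estimates can be sketched as follows. Cronbach's alpha uses its standard variance-based formula. For CR, the sketch assumes the commonly cited form for standardized loadings with uncorrelated errors, (Σλ)² / [(Σλ)² + Σ(1 − λ²)]; this may differ in detail from the calculator used in the study. All names and numbers are illustrative.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha; `rows` is a list of respondents, each a list of item scores."""
    k = len(rows[0])
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def composite_reliability(loadings):
    """CR from standardized loadings, assuming uncorrelated measurement errors."""
    squared_sum = sum(loadings) ** 2
    error_sum = sum(1 - l * l for l in loadings)
    return squared_sum / (squared_sum + error_sum)


# Made-up five-point responses from six respondents to three items.
responses = [
    [4, 5, 4],
    [2, 2, 3],
    [3, 3, 3],
    [5, 4, 5],
    [1, 2, 1],
    [4, 4, 5],
]
alpha = cronbach_alpha(responses)
cr = composite_reliability([0.8, 0.7, 0.9])  # hypothetical loadings
```

Alpha weights every item equally, whereas CR weights items by their loadings, so the two estimates diverge as the loadings become more unequal.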

The test–retest reliability over time was estimated by computing the intra-class correlation coefficient (ICC) with 95% confidence intervals (CIs) using SPSS® version 23 (IBM® Incorporated), based on a mean-rating (k = 3), absolute-agreement, two-way mixed-effects model (Vaz et al., 2013). An ICC value of between 0.79 and 0.89 indicated good reliability, while values ≥0.90 indicated excellent reliability of the scale (Koo and Li, 2016; Van Cauwenberg et al., 2014; Wong et al., 2012).
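For readers without SPSS, the point estimate of a mean-rating, absolute-agreement, two-way ICC can be computed from the ANOVA mean squares as (MS_rows − MS_error) / [MS_rows + (MS_columns − MS_error) / n]. The sketch below implements that formula only; it does not compute confidence intervals, and the function name and the small test–retest data set are assumptions for illustration.

```python
def icc_absolute_agreement_average(scores):
    """Two-way, absolute-agreement, average-measures ICC.

    `scores` is a list of subjects, each a list of k repeated measurements.
    """
    n = len(scores)
    k = len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (ms_cols - ms_error) / n)


# Made-up total scores for five respondents at test and retest.
test_retest = [
    [62, 64],
    [48, 47],
    [55, 58],
    [70, 69],
    [41, 45],
]
icc_value = icc_absolute_agreement_average(test_retest)
```

Under absolute agreement, a systematic shift between the first and second administration lowers the ICC, which is why the column mean square appears in the denominator.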

RESULTS AND DISCUSSION

Face and content validity

All the observations and suggestions raised by the experts during the initial qualitative evaluation of the draft instrument, especially regarding the framing of the statements and the response options, were noted and, where necessary, the instrument was revised accordingly in order to improve its validity (Devon et al., 2007).

The results of the content validity study of the 31-item draft PKAPIUM scale (Table 1) were interpreted using Lynn’s (1986) approach. None of the 31 items had an I-CVI of less than 0.80 for relevance, clarity, simplicity, or comprehensiveness. The S-CVI values for the whole 31-item PKAPIUM scale based on UA among the experts (S-CVI/UA) were 0.97 (relevance), 1.00 (clarity), 0.90 (simplicity), and 0.90 (comprehensiveness), and those based on the average approach (S-CVI/Ave) were 0.99 (relevance), 1.00 (clarity), 0.98 (simplicity), and 0.98 (comprehensiveness). In general, these results indicated excellent I-CVI (Devon et al., 2007; Lynn, 1986), with a high percentage (90%–100%) of agreement among the panel of experts on the acceptability of the content of the draft PKAPIUM scale, which suggested that the instrument might be a good one for assessing patients’ KAP on uncomplicated malaria (Bölenius et al., 2012).

Construct validity

All 300 (100%) respondents completed and returned the instruments. Most of them were female (65.0%, compared with 35.0% male), and the largest proportion (30.3%) were aged 28–37 years. About 58.7% of the respondents were not married and 51.0% had no source of income, which might be connected to the high percentage of them being students (42.0%) at secondary level (34.7%). The inclusion of respondents with varying socio-demographic characteristics was important because previous studies have reported that these characteristics influence how correctly participants respond to questions, which by extension affects the validity of an instrument (Emami and Safipour, 2013; Launiala, 2009).

The preliminary screening showed that the participants attempted all the items (100%) in the instrument, which implied its acceptability. The floor and ceiling analysis revealed no item for which more than 9% of respondents selected the highest or the lowest possible option. This level of responsiveness implied that the questions were neither too easy nor too hard for the respondents, which could be a good signal of the quality of the instrument (Reito et al., 2017; Lim et al., 2015). In addition, none of the items had missing data of up to 10%; hence, all the items were retained for subsequent analysis. Furthermore, the KMO value of 0.76 indicated the adequacy of the sample for factor analysis (Creswell, 2014; Field, 2009; Tabachnick and Fidell, 2007; Vaz et al., 2013). The correlation matrix showed inter-relationships among the variables, which was confirmed by Bartlett’s test of sphericity (chi-square = 1968.82, df = 210, p < 0.001). In addition, the determinant of the R-matrix (0.001), which was above 0.00001, indicated the absence of multicollinearity and singularity according to the rule of thumb (Field, 2009; Hair et al., 2006; Tabachnick and Fidell, 2007).
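The reported Bartlett's test values can be cross-checked against the standard formula, chi-square = −[n − 1 − (2p + 5)/6] · ln|R| with p(p − 1)/2 degrees of freedom. The sketch below assumes the test was run on p = 21 items (which is what 210 degrees of freedom implies) with n = 300, and uses the rounded determinant of 0.001 quoted in the text. The function name is hypothetical.

```python
import math


def bartlett_sphericity(determinant, n_obs, n_items):
    """Bartlett's test statistic and degrees of freedom from det(R)."""
    chi_square = -(n_obs - 1 - (2 * n_items + 5) / 6) * math.log(determinant)
    df = n_items * (n_items - 1) // 2
    return chi_square, df


# Rounded determinant from the text; n and p are assumptions stated above.
chi_square, df = bartlett_sphericity(determinant=0.001, n_obs=300, n_items=21)
```

With these inputs the degrees of freedom equal 210, matching the reported value, and the statistic comes out at roughly 2011, close to the reported 1968.82. The gap is plausibly explained by the determinant having been rounded to three decimal places, although the source does not state this.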

Table 1. Summary of content validity of the 31-item draft PKAPIUM scale (N = 6).

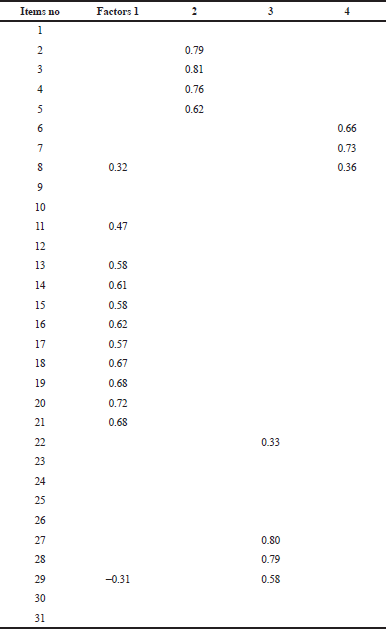

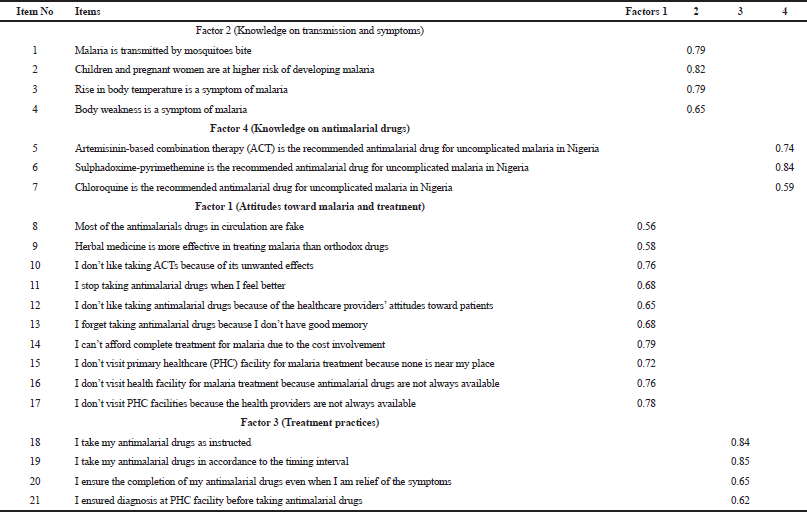

The first factor extraction on the 31-item draft PKAPIUM scale identified 10 items (marked in bold) with loadings below the minimum value of 0.30 and communalities of less than 0.50 (Table 2). Table 3 presents the four extracted factors with the 21 items retained after deletion; each factor had a minimum of three items loaded on it, with clear underlying themes, which might contribute to the quality of the instrument (Tabachnick and Fidell, 2007).

The observed regression weights of the items to their respective factors in the CFA outputs statistics agreed with the earlier hypothesized factor structures outlined in EFA, as explained through their convergent and discriminant validity, and goodness of fit indices.

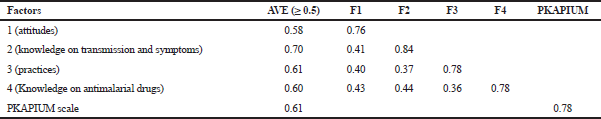

The AVE values for each construct and for the whole scale were within the range of 0.58–0.70 (Table 4), all >0.50, suggesting convergent validity (Fornell and Larcker, 1981; Henseler et al., 2009), while the absence of strong relationships between the same items and other factors demonstrated discriminant validity according to the cross-loading criterion (item-level discriminant validity) (Henseler et al., 2015). Similarly, the square roots of the AVE values of the respective constructs were in the range of 0.76–0.84 and were greater than the correlation coefficients for each construct; hence, the convergent and discriminant validity of the constructs and the scale were established based on the Fornell–Larcker criterion (Fornell and Larcker, 1981; Henseler et al., 2015; Hair and Lukas, 2014). It has been reported that discriminant and convergent validity lead to better construct validity (Hair and Lukas, 2014; Liuzhan, 2014; Shapiro, 2007). These results showed that the constructs were distinct from each other and were not measuring the same attributes; hence, multicollinearity was ruled out.

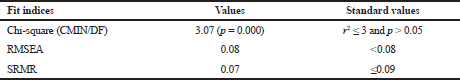

The goodness-of-fit results supported the adequacy of the hypothesized factor structure of the model (CMIN/DF = 3.07, p = 0.00, SRMR = 0.070, RMSEA = 0.08) (Table 5), indicating that the model was consistent with the respondents’ data because the indices were approximately within acceptable ranges. The significant chi-square statistic indicated some misfit of the model (Kline, 2015), which might be due to the sensitivity of the chi-square test to large sample sizes (i.e., N > 200) (Kline, 2015; Sivo et al., 2006). This was supported by the SRMR and RMSEA values, which were within the accepted limits of ≤0.090 and ≤0.08, respectively; these fit indices are not affected by sample size variations (Kline, 2015). This result implied that the fit of the 21 items to the model was fair. It should also be noted that a good fit of a model to data does not imply that the model is correct; it only indicates that the model is plausible (Kline, 2015).

Reliability analysis

The reliability of the 31-item draft scale was low (Cronbach’s alpha = 0.59), while that of the new 21-item scale after deletion was better and acceptable (Cronbach’s alpha = 0.74) (Hair and Lukas, 2014); this was confirmed by the estimated CR value of 0.82. Considering the internal consistencies of the subscales of the new instrument, “attitudes toward uncomplicated malaria and treatment” was the most reliable factor, with a high Cronbach’s alpha of 0.83, followed by “treatment practice” (0.73), “knowledge on transmission and symptoms” (0.69), and “knowledge on antimalarial drugs” (0.63), which was the lowest (Table 6). Similarly, the CR values for the constructs were in the range of 0.60–0.84. The slight variations between the two methods of estimation might be because CR takes into consideration the different outer loadings of the items on the constructs, while Cronbach’s alpha assumes that all the items have equal outer loadings on the constructs (Hair et al., 2017; Shook et al., 2004). Both sets of values were nevertheless within acceptable ranges, since 0.60 can be considered acceptable for preliminary/exploratory investigations (Liu, 2003). In general, Cronbach’s alpha and CR values will be the same or similar if the items measuring a single construct have the same factor loading strength with zero covariances; conversely, the greater the variation in loading strength among the items, the greater the difference between the Cronbach’s alpha and CR values (Shook et al., 2004).

Table 2. Factor structures and loading of 31-items draft PKAPIUM (N = 300) before deletion.

Of the 90 participants recruited for the test–retest reliability study of the 21-item PKAPIUM scale, 76 (84.4%) took part in both phases of the study, and the outcomes were good (Table 7) [intraclass correlation coefficient (ICC) = 0.92 (95% CI: 0.87–0.95), F = 43 (p = 0.51)]. For the individual factors, the ICC was 0.58 (95% CI: 0.50–0.65), F = 7.96 (p = 0.000) for knowledge on disease; 0.74 (95% CI: 0.60–0.84), F = 1.20 (p = 0.28) for knowledge on antimalarial drugs; 0.96 (95% CI: 0.96–0.98), F = 0.11 (p = 0.74) for attitudes; and 0.84 (95% CI: 0.75–0.90), F = 2.12 (p = 0.15) for practice. The ICC and the upper bound of the 95% CI for the overall PKAPIUM scale met the minimum requirement (>0.9) for the reliability of an instrument to be considered excellent (Van Cauwenberg et al., 2014; Vaz et al., 2013). The value was higher than that reported in a similar study (0.86) conducted in Turkey (Alsaffar, 2012). Among the individual subscales, attitude performed best, with both the lower and upper bounds of the 95% CI meeting the a priori criterion for excellent reliability (Koo and Li, 2016). It was followed by the practice subscale, whose ICC and upper bound met the minimum value of 0.84 for an instrument’s reliability to be categorized as good (Koo and Li, 2016; Van Cauwenberg et al., 2014; Wong et al., 2012). In contrast, the knowledge on disease construct had the lowest ICC (below 0.6), although its upper bound was better (0.65), while the ICC and upper bound for knowledge on drugs were good (Koo and Li, 2016). Overall, the results suggested good consistency of the subscales in measuring these variables over time. The low ICC value for knowledge on disease, however, implied that the respondents had a poor understanding of malaria transmission and symptoms.
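
For readers unfamiliar with how a test–retest ICC is obtained, the following is a minimal sketch of one common form: the single-measure, two-way, absolute-agreement ICC computed from ANOVA mean squares. The text does not state which ICC form was used in the study, so this choice is an assumption, and the scores below are invented toy data rather than study results.

```python
def icc_absolute_agreement(scores):
    """Single-measure, two-way, absolute-agreement ICC.

    scores: list of rows, one per respondent, each holding k repeated scores.
    Assumption: this ICC form is chosen for illustration only.
    """
    n = len(scores)
    k = len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Sums of squares for respondents (rows), occasions (columns) and residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + (k / n) * (ms_cols - ms_error)
    )


# Hypothetical toy data: three respondents scored on two occasions.
identical = [[1, 1], [2, 2], [3, 3]]  # same score on both occasions
shifted = [[1, 2], [2, 3], [3, 4]]    # every retest score one point higher

print(round(icc_absolute_agreement(identical), 3))  # 1.0
print(round(icc_absolute_agreement(shifted), 3))    # about 0.667
```

The second case shows why an absolute-agreement form is stricter than a consistency form: a uniform shift between test and retest preserves the ranking of respondents but still lowers the coefficient.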

Table 3. Factor structures and loadings of the 21 items in PKAPIUM after deletion (N = 300).

Table 4. AVE, the square root of the AVE (bold), and correlations between constructs (off-diagonal) for the four-factor PKAPIUM (N = 300) (21 items).

Table 5. Fit indices for the PKAPIUM (21 items) confirmatory factor model (N = 300).

Table 6. Cronbach’s alpha (α) and CR of the four-factor PKAPIUM scale (N = 300).

Table 7. Test–retest reliability [intraclass correlation coefficients (ICC)] of the 21-item PKAPIUM scale (N = 90).

The study had some limitations. First, the sample size was restricted to 300, which was considered the minimum for running factor analysis (Field, 2009). Increasing the sample size in future studies might reduce random error, which is one of the confounding factors affecting instrument validity, and this could further improve the validity and reliability of the scale and hence its generalizability in research. Second, the study was carried out only on patients who were receiving treatment for uncomplicated malaria, which implied that its usefulness in assessing patients receiving treatment for other disease conditions might be limited. There is a need to use the instrument in other disease conditions and in severe malaria, and to determine its criterion validity, in order to improve its quality and relevance. Furthermore, the instrument was designed and validated mainly for use on patients receiving treatment at the primary health care level in Plateau state, most of whom lived in rural areas with low literacy levels and few social amenities. This might have influenced their responses compared with those of people living in urban areas, where literacy levels are higher and amenities are available and accessible. The results may therefore not be truly representative of uncomplicated malaria patients across Nigeria and sub-Saharan Africa because of variations in literacy levels, cultural beliefs, and the socioeconomic status of the people. The use of the instrument in similar studies in different PHC facilities across the country and in higher-level health facilities is recommended to confirm its wider applicability.

CONCLUSION

The study showed that the internal consistency, stability, and validity of the developed PKAPIUM instrument were within acceptable ranges; hence, it could be considered acceptable for use in KAP-related surveys on uncomplicated malaria management.

ACKNOWLEDGMENTS

We appreciate all the staff of the PHC facilities in Plateau state, Nigeria, for the assistance rendered throughout the study, and the patients for agreeing to participate in the study.

FINANCIAL SUPPORT

None.

CONFLICT OF INTEREST

None.

REFERENCES

Adetola OT, Aishat LL, Olusola O. Perception and treatment practices of malaria among tertiary institution students in Oyo and Osun States, Nigeria. J Nat Sci Res, 2014; 4(5):30–3.

Agarwal NK. Verifying survey items for construct validity: a two-stage sorting procedure for questionnaire design in information behavior research. ASIST, New Orleans, LA, 2011.

Alsaffar AA. Validation of a general nutrition knowledge questionnaire in a Turkish student sample. Public Health Nutri, 2012; 15:2074–85.

Ayre C, Scally AJ. Critical values for Lawshe’s content validity ratio: revisiting the original methods of calculation. Measure Evaluat Counsel Dev, 2014; 47(1):79–86.

Bolarinwa OA. Principles and methods of validity and reliability testing of questionnaires used in social and health science researches. Nig Postgrad Med J, 2015; 22:195–201.

Bölenius K, Brulin C, Grankvist K, Lindkvist M, Söderberg J. A content validated questionnaire for assessment of self reported venous blood sampling practices. BMC Res Notes, 2012; 5:39.

Burlando A, Chukwuocha UM, Dozie IN, Crompton PD, Moebius J, Waisberg M, Zunzunegui MV. Malnutrition: the leading cause of immune deficiency diseases worldwide. Internat J Afr Nurs Sci, 2014; 3(3):4327–38.

Burns KEA, Duffett M, Kho ME, Meade MO, Adhikari NKJ, Sinuff T, Cook DJ. A guide for the design and conduct of self-administered surveys of clinicians. CMAJ, 2008; 179(3):245–52.

Creswell JW. Research design, qualitative, quantitative, and mixed methods approaches. 4th edition, Sage Publications, USA, 2014.

DeVon HA, Block ME, Moyle-Wright P, Ernst DM, Hayden SJ, Lazzara DJ, Kostas-Polston E. A psychometric toolbox for testing validity and reliability. J Nurs Scholarsh, 2007; 39(2):155–64.

Edet-Utan O, Ojediran T, Usman S, Akintayo-Usman NO, Fadero T, Oluberu OA, Isola IN. Knowledge, perception and practice of malaria management among non-medical students of higher institutions in Osun State Nigeria. Am J Biotech Med Res, 2016; 1(1):5–9.

Eke RA, Diwe KC, Chineke HN, Uwakwe KA. Evaluation of the practice of self-medication among undergraduates of Imo. Orient J Med, 2014; 26(3–4):79–83.

Emami A, Safipour J. Constructing a questionnaire for assessment of awareness and acceptance of diversity in healthcare institutions. BMC Health Services Res, 2013; 13:145.

Fana SA, Danladi M, Bunza A, Anka SA, Imam AU. Prevalence and risk factors associated with malaria infection among pregnant women in a semi-urban community of north western Nigeria. Infect Dis Pov, 2015; 4(24).

Federal Ministry of Health (FMoH). National guidelines for diagnosis and treatment of malaria. 3rd edition, Federal Ministry of Health, Abuja, Nigeria, 2015.

Field A. Discovering statistics using SPSS for windows. Sage Publications, London; Thousand Oaks; New Delhi, pp. 225–98, 667–82, 2009.

Fornell C, Larcker DF. Evaluating structural equation models with unobservable variables and measurement error. J Mark Res, 1981; 1:39–50.

Garson DG. Factor analysis: statnotes. 2008 [Online]. Available via http://www2.chass.ncsu.edu/garson/pa765/factor.htm (Accessed 23 April 2018).

Gasquet I, Villeminot S, Estaquio C, Durieux P, Ravaud P, Falissard B. Construction of a questionnaire measuring outpatients’ opinion of quality of hospital consultation departments. Health Qual Life Outcomes, 2004; 2(43).

Gie Yong A, Pearce S. A beginner’s guide to factor analysis: focusing on exploratory factor analysis. Tutor Quant Methods Psy, 2013; 9(2):79–94.

Habing B. Exploratory factor analysis. 2003 [Online]. Available via http://www.stat.sc.edu/~habing/courses/530EFA.pdf (Accessed 15 May 2018).

Hair JF Jr, Hult GTM, Ringle CM, Sarstedt M. A primer on partial least squares structural equation modeling (PLS-SEM). Sage Publications, Thousand Oaks, CA, 2017.

Hair JF Jr, Lukas B. Marketing research. McGraw-Hill Education, Australia, 2014.

Hair J, Black W, Babin B, Anderson R, Tatham RL. Multivariate data analysis. 6th edition, Pearson Educational, Inc, New Jersey, 2006.

Hamad EO, Savundranayagam MY, Holmes JD, Kinsella EA, Johnson AM. Toward a mixed-methods research approach to content analysis in the digital age: the combined content-analysis model and its applications to health care twitter feeds. J Med Internet Res, 2016; 18(3):e60.

Henseler J, Ringle C, Sinkovics R. The use of partial least squares path modeling in international marketing. Adv Int Market, 2009; 20:277–319.

Henseler J, Ringle CM, Sarstedt M. A new criterion for assessing discriminant validity in variance-based structural equation modeling. J Acad Mark Sci, 2015; 43:115–35.

Hlongwana KW, Mabaso ML, Kunene S, Govender D, Maharaj R. Community knowledge, attitudes and practices (KAP) on Malaria in Swaziland: a country earmarked for Malaria elimination. Malar J, 2009; 8(1):1–8.

Hsieh HF, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res, 2005; 15(9):1277–88.

Hu Q, Dinev T, Hart P, Cooke D. Managing employee compliance with information security policies: the critical role of top management and organizational culture. Decision Sci, 2012; 43(4):615–60.

Jimam NS, David S, Musa N, Kadir GA. Assessment of the knowledge and patterns of malaria management among the residents of Jos metropolies. World J Pharm Pharm Sci, 2015; 4(6):1686–98.

Joke JG, Lidwine BM, Dirk LK, Jeroen JV, Kim JO. Reliability and validity of the C-BiLLT: a new instrument to assess comprehension of spoken language in young children with cerebral palsy and complex communication needs. Augment Altern Comm, 2014; 30(3):252–66.

Kline RB. Principles and practice of structural equation modeling. 4th edition, Guilford Publications, New York, NY, 2015.

Koo TK, Li MY. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J Chiropr Med, 2016; 15:155–63.

Hogarty KY, Hines CV, Kromrey JD, Ferron JM, Mumford KR. The quality of factor solutions in exploratory factor analysis: the influence of sample size, communality, and over determination. Edu Psychol Meas, 2005; 65:202.

Launiala A. How much can a KAP survey tell us about people’s knowledge, attitudes and practices? Some observations from medical anthropology research on malaria in pregnancy in Malawi. Anthropol Matters [Online], 2009; 11:1. Available via www.anthropologymatters.com (Accessed 23 April 2018).

Ledesma RD, Valero-Mora P. Determining the number of factors to retain in EFA: an easy-to-use computer program for carrying out parallel analysis. Pract Assess Res Evaluat [Online], 2007; 12(2). Available via http://pareonline.net/getvn.asp?v=12&n=2 (Accessed 15 May 2018).

Lim CR, Harris K, Dawson J, Beard DJ, Fitzpatrick R, Price AJ. Floor and ceiling effects in the OHS: an analysis of the NHS PROMs data set. BMJ Open, 2015; 5:e007765.

Liu J, Isiguzo C, Sieverding M. Differences in malaria care seeking and dispensing outcomes for adults and children attending drug vendors in Nasarawa, Nigeria. Trop Med Int Health, 2015; 20(8):1081–92.

Liu Y. Developing a scale to measure the interactivity of websites. J Advert Res, 2003; 6:207–17.

Liuzhan J. Analysis of moment structure program application in management and organizational behavior research. J Chem Pharmaceut Res, 2014; 6(6):1940–7.

Lynn MR. Determination and quantification of content validity. Nurs Res, 1986; 35(6):382–5.

Müller S, Kohlmann T, Wilke T. Validation of the adherence barriers questionnaire: an instrument for identifying potential risk factors associated with medication-related non-adherence. BMC Health Serv Res, 2015; 15:153.

Mundfrom D, Shaw D, Ke T. Minimum sample size recommendations for conducting factor analyses. Intern J Testing, 2005; 5(2):159–68.

Muthén LK, Muthén BO. How to use a Monte Carlo study to decide on sample size and determine power. Structural equation modeling. Multidiscip J, 2002; 9:599–620.

National Population Commission (NPC) [Nigeria], national malaria control programme (NMCP) [Nigeria], & ICF international. Nigeria malaria indicator survey 2010. 2012 [Online]. Available via http://dhsprogram.com/what-we-do/survey/survey-display-392.cfm (Accessed 16 April 2018).

Nunnally JC, Bernstein IH. Psychometric theory. 3rd edition, McGraw-Hill, New York, NY, p. 736, 1994.

Ofori-Asenso R, Agyeman AA. Irrational use of medicines: a summary of key concepts. Pharmacy, 2016; 4:35.

Orimadegun AE, Ilesanmi KS. Mothers’ understanding of childhood malaria and practices in rural communities of Ise-Orun, Nigeria: implications for malaria control. J Fam Med Pri Care, 2015; 4(2):226–31.

Parsian N, Dunning T. Developing and validating a questionnaire to measure spirituality: a psychometric process. Glob J Health Sci, 2009; 1(1):2–11.

Pearson RH, Mundfrom DJ. Recommended sample size for conducting exploratory factor analysis on dichotomous data. J Modern Applied Stat Methods, 2010; 9(2):359–68.

Peters GJY. The alpha and the omega of scale reliability and validity: Why and how to abandon Cronbach’s alpha and the route towards more comprehensive assessment of scale quality. Eur Health Psycho, 2014; 16(2):56–66.

Raykov T. Estimation of composite reliability for congeneric measures. Applied Psycho Measure, 1997; 21(2):173–84.

Reito A, Järvistö A, Jämsen E, Skyttä E, Remes V, Huhtala H, Niemeläinen M, Eskelinen A. Translation and validation of the 12-item Oxford knee score for use in Finland. BMC Musculoskeletal Disorders, 2017; 18:74.

Rodrigues IB, Adachi JD, Beattie KA, MacDermid JC. Development and validation of a new tool to measure the facilitators, barriers and preferences to exercise in people with osteoporosis. BMC Musculoskeletal Disorders, 2017; 18:540.

Saint-Maurice PF, Welk GJ, Beyler NK, Bartee RT, Heelan KA. Calibration of self-report tools for physical activity research: the Physical Activity Questionnaire (PAQ). BMC Public Health, 2014; 14:461.

Sangoseni O, Hellman M, Hill C. Development and validation of a questionnaire to assess the effect of online learning on behaviors, attitude and clinical practices of physical therapists in United States regarding of evidence-based practice. Internet J Allied Health Sci Prac, 2013; 11:1–12.

Shapiro A. Statistical inference of moment structures. In: Handbook of latent variable and related models. Elsevier, North Holland, pp. 229–60, 2007.

Shook CL, Ketchen DJ, Hult GTM, Kacmar KM. An assessment of the use of structural equation modeling in strategic management research. Strategic Manage J, 2004; 25(4):397–404.

Sivo SA, Xitao F, Witta EL, Willse JT. The search for “optimal” cutoff properties: fit index criteria in structural equation modeling. J Exptal Edu, 2006; 74:267–88.

Stephenson MT, Holbert RL. A Monte Carlo simulation of observable versus latent variable structural equation modeling techniques. Comm Res, 2003; 30(3):332–54.

Suhr D. Exploratory or confirmatory factor analysis? In Statistics and data analysis. 31st Annual SAS Users Group International, SAS Institute Inc., Cary, NC, 2006.

Tabachnick BG, Fidell LS. Using multivariate statistics. 5th edition, Allyn & Bacon, Boston, MA, 2007.

Terwee CB, Bot SD, de Boer MR, van der Windt DA, Knol DL, Dekker J, Bouter LM, de Vet HC. Quality criteria were proposed for measurement properties of health status questionnaires. J Clin Epidemiol, 2007; 60(1):34–42.

Thoemmes F, MacKinnon D, Reiser M. Power analysis for complex mediational designs using Monte Carlo methods. Struct Equat Model, 2010; 17:510–34.

Uchenna AP, Johnbull OS, Chinonye EE, Christopher OT, Nonye AP. The knowledge, attitude and practice of universal precaution among rural primary healthcare workers in Enugu south-east Nigeria. World J Pharm Pharm Sci, 2015; 4(9):109–25.

Van Cauwenberg J, Van Holle V, De Bourdeaudhuij I, Owen N, Deforche B. Older adults’ reporting of specific sedentary behaviors: validity and reliability. BMC Public Health, 2014; 14:734.

Van Der Eijk C, Rose J. Risky business: factor analysis of survey data: assessing the probability of incorrect dimensionalisation. PLoS One, 2015; 10:3.

Vaz S, Falkmer T, Passmore AE, Parsons R, Andreou P. The case for using the repeatability coefficient when calculating test–retest reliability. PLoS One, 2013; 8(9):e73990.

Wolf EJ, Harrington KM, Clark SL, Miller MW. Sample size requirements for structural equation models: an evaluation of power, bias, and solution propriety. Edu Psycho Measure, 2013; 73(6):913–34.

World Health Organization (WHO). Guidelines for the treatment of malaria. 3rd edition, WHO, Geneva, Switzerland, 2015.

Wong KL, Ong SF, Kuek TY. Constructing a survey questionnaire to collect data on service quality of business academics. Eur J Soc Sci, 2012; 29(2):209–21.

Worthington RL, Whittaker TA. Scale development research: a content analysis and recommendations for best practices. Counsel Psychol, 2006; 34(6):806–38.

Yin X, Tu X, Tong Y, Yang R, Wang Y, Cao S, Fan H, Wang F, Gong Y, Yin P, Lu Z. Development and validation of a tuberculosis medication adherence scale. PLoS One, 2012; 7(12):e50328.

Zamanzadeh V, Ghahramanian A, Rassouli M, Abbaszadeh A, Alavi-Majd H, Nikanfar AR. Design and implementation content validity study: development of an instrument for measuring patient-centered communication. J Caring Sci, 2015; 4(2):165–78.